Research – John Magee

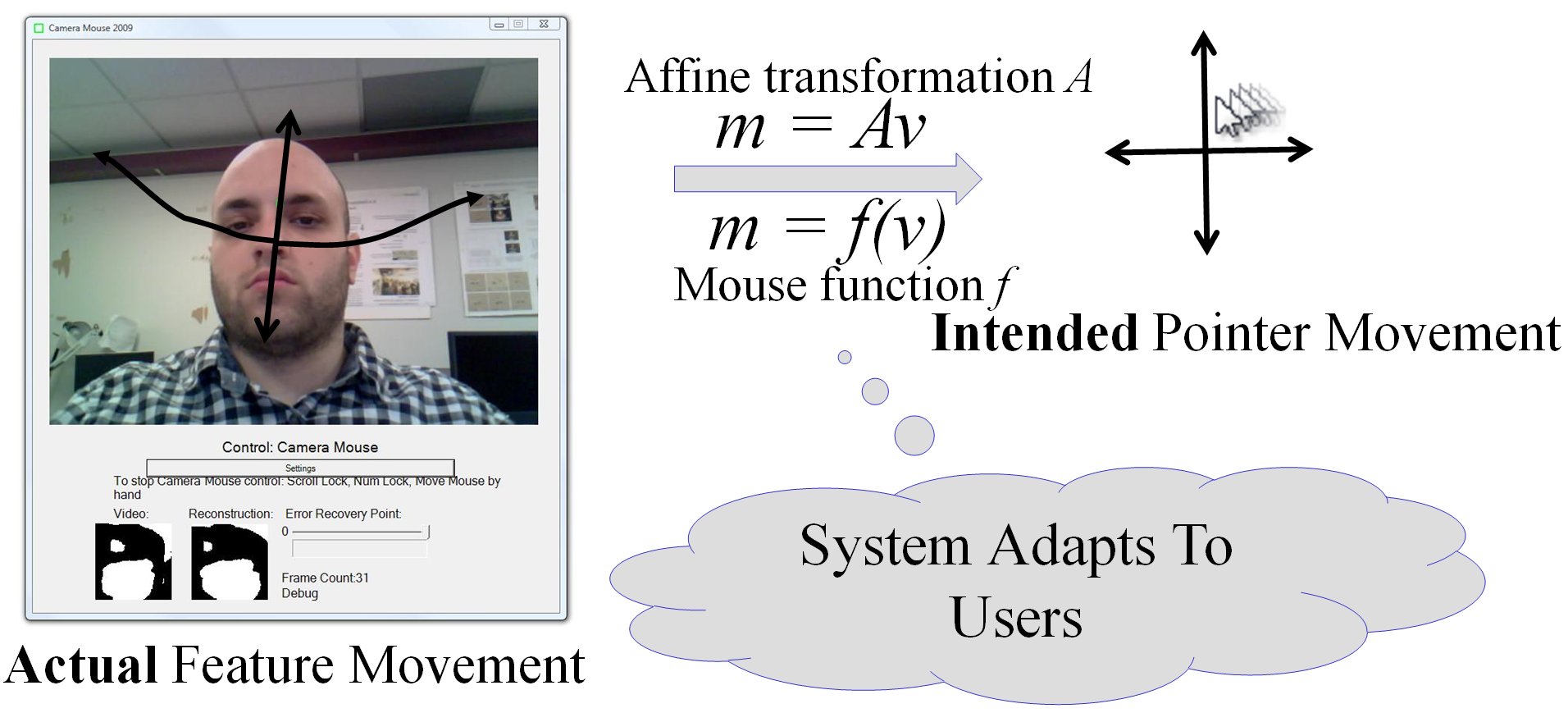

My primary research focus is in accessible computing, a sub-field of Human-Computer Interaction (HCI), where I investigate technologies that enable people with disabilities to communicate and participate socially in the world around them through computers. Computer Vision-based interactions are a particular interest. Specifically, much of my work involves creating and evaluating systems that allow people with motor impairments to control a computer mouse pointer.

Much of my research agenda emanates from a project called Camera Mouse, a computer vision-based interface that allows people with disabilities to control a mouse pointer with their head motions or by moving other body parts. Camera Mouse was developed by a team led by my Ph.D. advisor Margrit Betke at Boston University and James Gips at Boston College and has included my contributions over many years. The public version has over 3 million downloads, while a research version serves as a platform for research experimentation and evaluation of new features.

My research builds upon and advances the success of Camera Mouse and similar systems. Once users have the ability to control a mouse pointer with their head motion, numerous under-studied research questions can be explored. Typically, users are required to adapt to the interfaces that they wish to use. I am particularly interested in ability-based interfaces that change and adapt to the user and their individual abilities.

Current Projects

Target-Aware Dwell (aka Predictive Link Following)

|

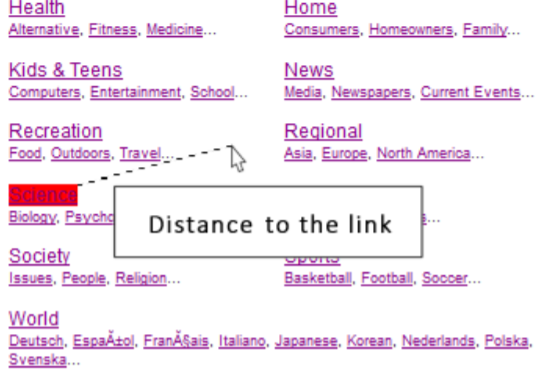

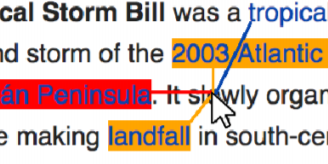

A current focus area is on click actuation within mouse replacement interfaces. The long-standing behavior of Camera Mouse has been a configurable dwell time selection - the user holds the mouse in a small area for a set amount of time, and the system generates a simulated mouse click. However, some users with motor impairments do not have the ability to hold the mouse pointer steady in a small area, causing them to misclick on targets. My students and I have developed a novel clicking technique called Target-Aware Dwell or Predictive Link. With many traditional clicking techniques, it can be difficult to click on small and cluttered targets such as links on a web page. Previous approaches have relied on target-agnostic adjustments (such as slowing the mouse velocity when the mouse changes directions) or static target-aware adjustments to the size of links (e.g. area cursors) or ways to pull the pointer toward a target. This new approach uses a prediction score that is a function of the distance from the mouse to a nearby link and the time spent near or over the link. The prediction score increases as you remain near a link, and decays over time as the pointer is away from a link. In this way, hovering around several links still allows the user to select a link by spending the most time near the link they intend to follow. Even if they cannot stop the mouse pointer over the link, it can still be selected. My students and I iteratively developed this technique and conducted initial evaluations of its performance. We have built the system into a Google Chrome extension that allows it to be downloaded and used on the most popular web browser. Conversely, most prior target-aware techniques have limited practical use because they can only be used within specific research software. More in-depth and long-term evaluations of this technique are in progress. |
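The prediction score described above can be illustrated with a minimal sketch. This is not the code of the Chrome extension: the linear distance weighting, the exponential decay, the constants, and the names `Link` and `update_scores` are all assumptions chosen only to show a score that grows near a link and decays away from it.

```python
import math
from dataclasses import dataclass

# Illustrative constants; none of these values come from the published system.
NEAR_RADIUS = 60.0       # pixels within which the pointer counts as "near" a link
DECAY_PER_SECOND = 0.5   # fraction of the score kept after one second away
FOLLOW_THRESHOLD = 1.0   # score at which the link is followed


@dataclass
class Link:
    name: str
    x: float  # link center, in pixels
    y: float
    score: float = 0.0


def update_scores(links, pointer, dt):
    """Advance all link scores by dt seconds; return the link to follow, if any."""
    px, py = pointer
    for link in links:
        distance = math.hypot(link.x - px, link.y - py)
        if distance <= NEAR_RADIUS:
            # Near the link: score grows with time, faster when closer.
            link.score += dt * (1.0 - distance / NEAR_RADIUS)
        else:
            # Away from the link: score decays over time.
            link.score *= DECAY_PER_SECOND ** dt
    best = max(links, key=lambda link: link.score)
    return best if best.score >= FOLLOW_THRESHOLD else None


# A jittery pointer that circles the "News" link without ever resting on it.
links = [Link("Home", 100, 100), Link("News", 100, 130)]
path = [(110, 135), (95, 120), (105, 140), (90, 128)] * 20
selected = None
for pointer in path:
    selected = update_scores(links, pointer, dt=0.05)
    if selected is not None:
        break
print(selected.name if selected else "no link followed")
```

With these made-up numbers the pointer stays near both links, but it spends its time closer to "News", so that link's score crosses the threshold first even though the pointer never stops on it.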

J. Vazquez-Li, L. Pierson Stachecki, and J. Magee. Eye-Gaze With Predictive Link Following Improves Accessibility as a Mouse Pointing Interface. Proceedings of the 18th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS '16), 297-298. ACM. Reno, NV, October 2016. pdf, https://doi.org/10.1145/2982142.2982208

J. Roznovjak and J. Magee. Predictive Link Following for Accessible Web Browsing. Proceedings of the 17th International ACM SIGACCESS Conference on Computers & Accessibility (ASSETS '15), 403-404. Lisbon, Portugal, October 2015. pdf, https://doi.org/10.1145/2700648.2811391

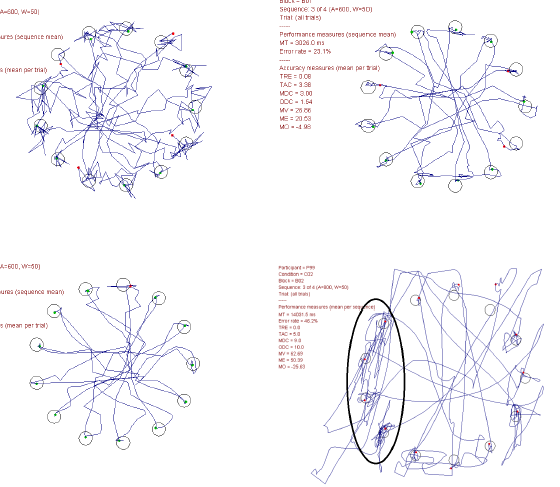

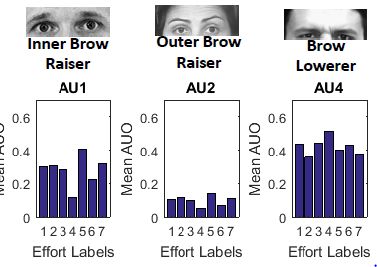

Fitts' Law Evaluation of Pointing Interfaces

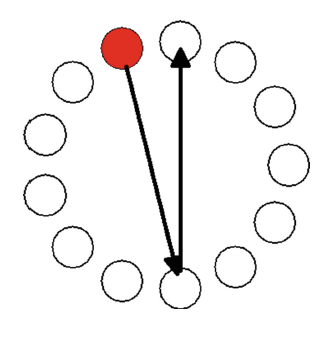

I have conducted empirical evaluations of point-and-click interfaces using Fitts' law in collaboration with Scott MacKenzie and Torsten Felzer. Fitts' law relates the difficulty of a selection task to the distance from the pointer's current location to the target and the size of the target. Intuitively, a target that is small and far away has higher difficulty, while a nearby or larger target has lower difficulty. For a single point-and-select task, the Index of Difficulty (ID) captures this difficulty in bits. A typical experiment measures Movement Time (MT) to perform the task (among other dependent variables such as error rates). Repeated trials provide data for statistical analysis of the pointing performance.

A key measure is the ratio ID/MT, or throughput, which has units of bits/second. Throughput is a measure of the rate of communication and describes how much information a user conveys to the computer system through the pointing interface. Importantly, the measure allows cross-study comparison of results, so that different input methods can be compared (this is analogous to using words-per-minute to measure typing speed). Multiple prior studies have produced expected throughput measures for mice, touchpads, trackballs, and other devices to serve as comparison benchmarks.

Our experiments with people with disabilities allow us to compare the input and clicking method (the primary independent variable) within user, across users, and across input methods. A collaboration on a Fitts' law evaluation with Felzer and MacKenzie used Camera Mouse for pointing and compared the performance of dwell-time selection to selection via a physical headband device that Felzer had developed to actuate clicks with eyebrow raises. We found that our case study participant had higher throughput with dwell-time selection, but subjectively preferred intentional click actuation because the user felt more in control of making the click happen.

As a follow-up, I developed, with one of my students, a computer-vision eyebrow-raise selection mechanism and incorporated it into the research version of Camera Mouse to re-create the prior experiment and compare against the physical headband. A later collaboration compared two different approaches for using a keypad to perform mouse selections.
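To make the Index of Difficulty and throughput described above concrete, here is a small worked sketch. The text does not state which formula was used for ID; the sketch assumes the widely used Shannon formulation, ID = log2(D/W + 1), and the function names and sample values are illustrative only. Published studies often use an "effective" ID adjusted for observed accuracy, which this sketch omits.

```python
import math


def index_of_difficulty(distance, width):
    """Index of Difficulty in bits (Shannon formulation, assumed here)."""
    return math.log2(distance / width + 1)


def throughput(distance, width, movement_time):
    """Throughput in bits/second: ID divided by movement time in seconds."""
    return index_of_difficulty(distance, width) / movement_time


# Hypothetical trials: (distance to target, target width, movement time in s).
trials = [(300, 100, 1.0), (700, 100, 1.5), (700, 50, 2.4)]
for distance, width, mt in trials:
    bits = index_of_difficulty(distance, width)
    print(f"D={distance} W={width} ID={bits:.2f} bits TP={bits / mt:.2f} bits/s")
```

A far or small target raises ID; a user who still completes it quickly earns a higher throughput, which is what makes the measure comparable across devices and studies.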

T. Felzer, I.S. MacKenzie, and J. Magee. Comparison of two methods to control the mouse using a keypad. Proceedings of the 15th International Conference on Computers Helping People With Special Needs - ICCHP 2016, LNCS 9759, 511-518. Springer. Linz, Austria, July 2016. pdf, https://doi.org/10.1007/978-3-319-41267-2_72

J. Magee, T. Felzer, and I.S. MacKenzie. Camera Mouse + ClickerAID: Dwell vs. Single-Muscle Click Actuation in Mouse-Replacement Interfaces. HCI International / Universal Access in Human–Computer Interaction. HCII/UAHCI 2015. LNCS 9175, 74-84. Los Angeles, CA, August 2015. pdf, https://doi.org/10.1007/978-3-319-20678-3_8

Simulation of Motor Impairments in Pointing Interfaces

|

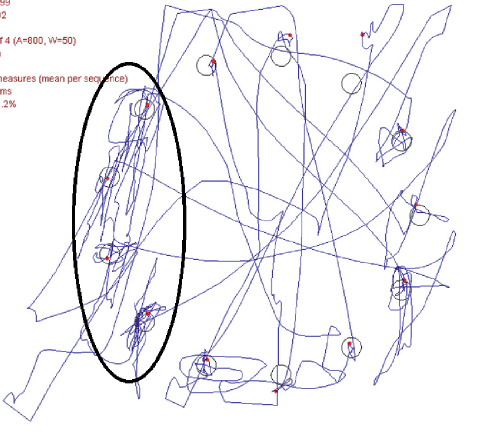

Use of research participants with motor impairments during initial development and testing of new interaction techniques is often impractical. When using Camera Mouse themselves, student researchers typically have better control of the pointer than our intended end users. This has led to an interesting direction of research - can we simulate the abilities of a person with motor impairments for the purpose of developing and testing interaction methods? The previous Fitts' law experiments had recorded mouse movement trajectories and click coordinates for a number of participants, with and without disabilities. Analysis of mouse trajectories showed that involuntary motions likely contributed to lower throughput. My students and I developed an initial proof-of-concept to simulate pointer control abilities of a user with motor impairments. The initial concept involved adding additional random mouse movements to the actual movements to simulate unintentional movement and was built into the research version of Camera Mouse. We conducted a Fitts' law experiment while running the simulation and compared results to the previously recorded data. I believe this approach is promising and we are continuing to study it. A more speculative future direction will be to use the recorded mouse trajectories from several users with disabilities as a dataset to train machine learning systems to perform the simulations. A good simulation tool would have wide-ranging research implications (assistance in developing systems) and could also be used by commercial software developers to test the accessibility of their own interfaces. |
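The idea of adding random movements to the actual pointer movements can be sketched in a few lines. The text does not describe the noise model used in the research version of Camera Mouse; the independent Gaussian jitter, the `sigma` parameter, and the function name below are assumptions made purely for illustration.

```python
import random


def add_involuntary_motion(trajectory, sigma=8.0, seed=None):
    """Return a copy of the trajectory with random offsets added to each sample.

    trajectory: list of (x, y) pointer positions from the real input device.
    sigma: standard deviation of the added movement in pixels (hypothetical).
    seed: optional seed so that a simulated session can be reproduced.
    """
    rng = random.Random(seed)
    noisy = []
    for x, y in trajectory:
        # Perturb each coordinate independently to mimic unintended motion.
        noisy.append((x + rng.gauss(0.0, sigma), y + rng.gauss(0.0, sigma)))
    return noisy


# A steady movement toward a target, as a researcher without impairments might produce.
steady = [(float(i * 10), 200.0) for i in range(6)]
simulated = add_involuntary_motion(steady, sigma=8.0, seed=42)
for (x0, y0), (x1, y1) in zip(steady, simulated):
    print(f"({x0:6.1f}, {y0:6.1f}) -> ({x1:6.1f}, {y1:6.1f})")
```

Feeding the perturbed positions into the same Fitts' law task used with real participants would allow the simulated throughput to be compared against the recorded data, as described above.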

MathSpring - Intelligent Math Tutor Project

My collaboration on a recently funded NSF grant (IIS-1551590) enables me to leverage my work in accessible computing in a new domain. The main project involves MathSpring, a web-based "smart math tutor" that has been developed at UMass by Beverly Woolf and at WPI by Ivon Arroyo. The existing project models a student's affect and engagement by measuring correct vs. incorrect responses and occasionally polling the student directly. My contributions expand upon that by incorporating mouse pointer analysis and, working with collaborators at Boston University, using computer vision techniques to measure facial expressions as additional indicators of student affect and engagement. The smart tutor's avatar intervenes to help the student persist through challenges in their learning, and our system will notice improvements based on those interventions.

At Clark we collected the initial dataset for this new work. We recorded four data streams of students using the math tutor: two video streams (a laptop webcam and a secondary GoPro to capture their facial expressions while they look down to work on scrap paper), the screen video and audio, and the mouse pointer trajectories and clicks. My students and I are currently analyzing the mouse movement data and incorporating it as an additional input feature to the machine learning system. The mouse trajectory analysis uses techniques that are both target-agnostic (e.g. velocity, direction changes, entropy) and target-aware (is the mouse pointer moving near the question text, the "hint" button, or the answer buttons?).
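The target-agnostic features named above (velocity, direction changes, entropy) could be computed along the following lines. This is only a sketch: the exact feature definitions used in the project are not given in the text, so the 90-degree turn threshold, the eight heading bins, and the function name are assumptions.

```python
import math
from collections import Counter


def trajectory_features(points, dt, bins=8, turn_threshold=math.pi / 2):
    """Summarize a pointer trajectory sampled every dt seconds.

    points: list of (x, y) pointer positions.
    Returns mean speed (pixels/s), a count of sharp direction changes,
    and the Shannon entropy (bits) of the quantized movement headings.
    """
    steps = [(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(points, points[1:])]
    speeds = [math.hypot(dx, dy) / dt for dx, dy in steps]
    # Headings are only defined for samples where the pointer actually moved.
    headings = [math.atan2(dy, dx) for dx, dy in steps if dx or dy]

    direction_changes = 0
    for a, b in zip(headings, headings[1:]):
        # Smallest absolute angle between consecutive headings, in [0, pi].
        turn = abs((b - a + math.pi) % (2 * math.pi) - math.pi)
        if turn >= turn_threshold:
            direction_changes += 1

    # Quantize headings into equal angular bins and measure their entropy.
    counts = Counter(int((h + math.pi) / (2 * math.pi) * bins) % bins for h in headings)
    total = sum(counts.values())
    entropy = sum((c / total) * math.log2(total / c) for c in counts.values())

    return {
        "mean_speed": sum(speeds) / len(speeds) if speeds else 0.0,
        "direction_changes": direction_changes,
        "heading_entropy": entropy,
    }


straight = [(0, 0), (10, 0), (20, 0), (30, 0)]
back_and_forth = [(0, 0), (10, 0), (0, 0), (10, 0)]
print(trajectory_features(straight, dt=0.1))
print(trajectory_features(back_and_forth, dt=0.1))
```

A steady straight movement yields no direction changes and zero heading entropy, while a hesitant back-and-forth movement scores higher on both; values like these would serve as additional input features alongside the target-aware measures.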

This work is supported by NSF grant IIS-1551590, "INT: Collaborative Research: Detecting, Predicting and Remediating Student Affect and Grit Using Computer Vision".

Community-Based Research with Seven Hills Foundation

I have an ongoing collaboration with Seven Hills Foundation in Worcester. Seven Hills manages several programs for people with disabilities and older adults (e.g. an adult day program and a pediatric residential facility). With their collaboration, I am currently seeking funding for longitudinal studies involving Camera Mouse and the software I have developed.

Earlier Projects

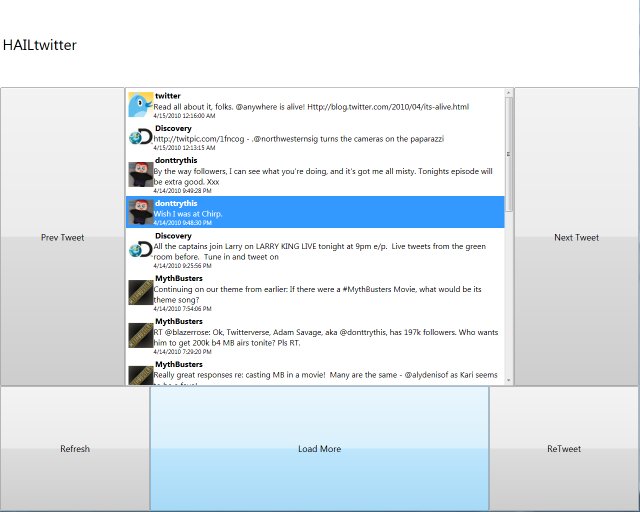

HAIL: Hierarchical Adaptive Interface Layout

HAIL is a framework to adapt software to the needs of individuals with severe motion disabilities who use mouse substitution interfaces. The Hierarchical Adaptive Interface Layout (HAIL) model is a set of specifications for the design of user interface applications that adapt to the user. In HAIL applications, all of the interactive components are placed on configurable toolbars along the edge of the screen. Paper: pdf. Abstract.

Adaptive Mouse Functions

Traditional approaches to assistive technology are often inflexible, requiring users to adapt their limited motions to the requirements of the system. Such systems may have static or difficult-to-change configurations, which makes it challenging for multiple users at a care facility to share the same system and for users whose motion abilities slowly degenerate to keep using it. Current technology also does not address short-term changes in motion abilities that can occur within a single computer session. As users fatigue while using a system, they may experience more limited motion ability or additional unintended motions. To address these challenges, we propose adaptive mouse-control functions to be used in our mouse-replacement system. These functions can be changed to adapt the technology to the needs of the user, rather than making the user adapt to the technology. Paper: pdf.

Multi-camera Interfaces

|

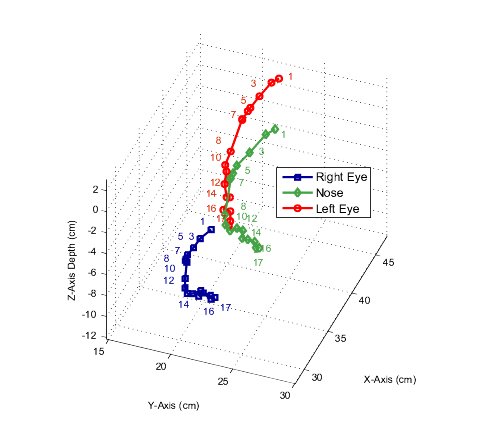

We are working on a multi-camera capture system that can record synchronized images from multiple cameras and automatically analyze the camera arrangement. In a preliminary experiment, 15 human subjects were recorded from three cameras while they were conducting a hands-free interaction experiment. The three-dimensional movement trajectories of various facial features were reconstructed via stereoscopy. The analysis showed substantial feature movement in the third dimension, which are typically neglected by single-camera interfaces based on two-dimensional feature tracking. Paper: pdf. |

EyeKeys: An Eye Gaze Interface

Some people are so severely paralyzed that they only have the ability to control the muscles that move their eyes. For these people, eye movements become the only way to communicate. We developed a system called EyeKeys that detects whether the user is looking straight ahead or off to the left or right side. EyeKeys runs on consumer-grade computers with video input from inexpensive USB cameras. The face is tracked using a multi-scale pyramid of template correlation. Eye motion is determined by exploiting the symmetry between the left and right eyes. The detected eye direction can then be used to control applications such as spelling programs or games. We developed the game “BlockEscape” to gather quantitative results to evaluate EyeKeys with test subjects. Paper: Abstract. Pdf file. Video.

Acknowledgements

This research is based upon work supported by the National Science Foundation (NSF) under Grants 0910908, 0855065, 0713229, 0093367. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.